Accountable AI: Building Trust in the Age of AI Agents

AI is transforming industries, powering everything from personalized marketing to autonomous vehicles. But as AI systems become more influential in our daily lives, the call for accountability grows louder. What does it mean for AI to be “accountable,” and why does it matter?

In this blog post, we explore what accountability in AI really means and the role Patsnap plays in putting it into practice.

What Is Accountable AI?

According to Kevin Werbach, a professor at the Wharton School, Accountable AI means implementing technical, operational, and legal mechanisms to ensure that AI is appropriately governed and to promote responsible, safe, and trustworthy uses.

Why Is Accountability Important?

Accountability matters for several reasons:

- Trust: Users and stakeholders need to trust that AI systems are fair, reliable, and safe.

- Ethics: AI can amplify biases or make opaque decisions. Accountability ensures ethical standards are upheld.

- Regulation: Governments and industries are introducing regulations (like the EU AI Act) that require organizations to demonstrate responsible AI practices.

- Risk Management: Accountable AI helps organizations identify, mitigate, and respond to legal, reputational, and operational risks.

Key Principles of Accountable AI

Accountable AI ensures that systems are not only effective but also ethical, transparent, and trustworthy. AI systems should be explainable, so that users can understand how and why decisions are made.

AI doesn’t operate in a vacuum. Behind every algorithm is a team of developers, data scientists, and decision-makers. Responsibility means there must be clear ownership of the AI system and its outcomes. Whether it’s a misdiagnosis in healthcare or bias in hiring algorithms, someone must be answerable and empowered to take corrective action.

AI systems learn from data, so they can reflect the societal biases in that data. Without safeguards, AI can reinforce discrimination in areas like hiring, lending, or policing. Fairness means actively designing systems to detect and mitigate bias.
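To make "detecting bias" a little more concrete, here is a minimal sketch of one common check: comparing how often each group receives a favorable decision. The group names, the sample decisions, and the 0.2 review threshold are all illustrative assumptions, not recommended values, and a real fairness audit would look at more than this single measure.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, decision) pairs, where decision
    is True for a favorable outcome (for example, "advance to interview").
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions from a hiring model, for illustration only.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

gap = parity_gap(decisions)
# Assumed threshold: a gap this large is flagged for a human owner to review.
needs_review = gap > 0.2
```

A large gap does not prove discrimination on its own, but it gives the accountable owner of the system a specific signal to investigate.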

Human-in-the-Loop: How Patsnap Builds Accountable AI and Puts You in Control

In the race to adopt AI, speed often steals the spotlight. But at Patsnap, we believe that true innovation isn’t just about moving faster; it’s about thinking deeper, acting smarter, and staying in control.

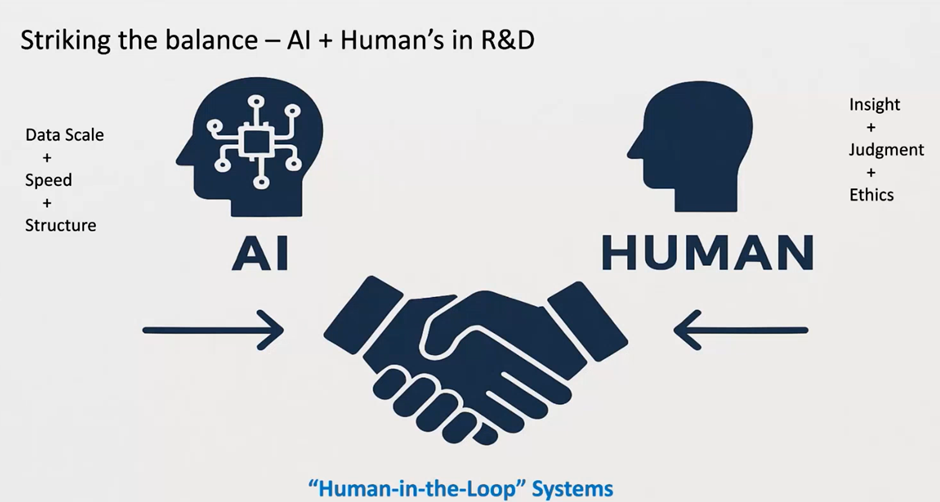

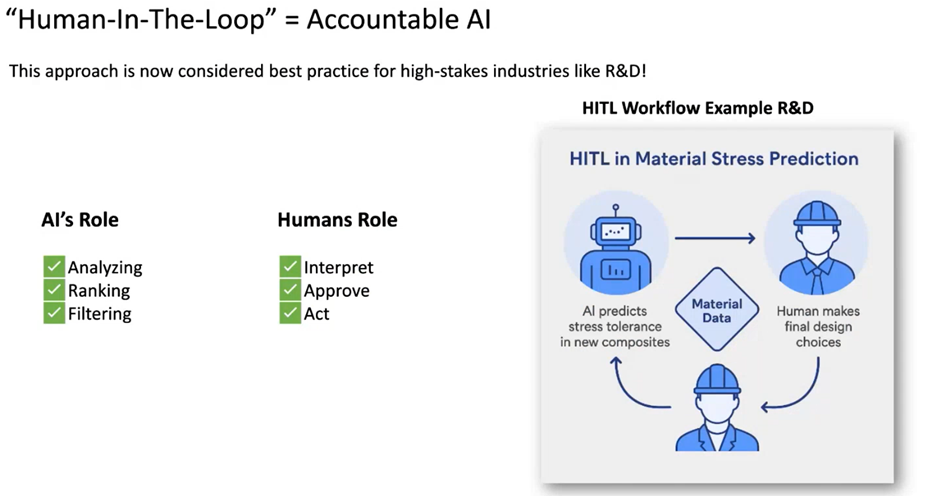

That’s why we champion the Human-in-the-Loop principle. It’s not just a technical framework; it’s a mindset, one that ensures AI works with you, not instead of you.

Human-in-the-Loop means that you remain at the center of every decision, every insight, and every action. AI may generate potential answers, but it’s your domain expertise, intuition, and judgment that validate those results and determine what happens next.
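As a general illustration of the pattern (not a description of how Patsnap’s products are implemented), a human-in-the-loop gate can be sketched as a review step that every AI suggestion must pass before anything acts on it. The class and function names below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Suggestion:
    """An AI-generated answer awaiting human judgment."""
    text: str
    status: str = "pending"  # pending -> approved | rejected
    reviewer: Optional[str] = None


@dataclass
class ReviewQueue:
    """Records who approved or rejected each suggestion."""
    audit_log: List[str] = field(default_factory=list)

    def review(self, suggestion: Suggestion, reviewer: str, approve: bool) -> Suggestion:
        suggestion.status = "approved" if approve else "rejected"
        suggestion.reviewer = reviewer
        self.audit_log.append(f"{reviewer} {suggestion.status}: {suggestion.text}")
        return suggestion


def act_on(suggestion: Suggestion) -> str:
    """Refuse to proceed unless a human has approved the suggestion."""
    if suggestion.status != "approved":
        raise PermissionError("suggestion has not been approved by a human reviewer")
    return f"executing: {suggestion.text}"


queue = ReviewQueue()
draft = Suggestion("Flag these three documents as most relevant")
queue.review(draft, reviewer="analyst", approve=True)
result = act_on(draft)
```

The design choice worth noting is that the decision and the decision-maker are both written to a log, so the outcome can be traced back to a named person later.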

At Patsnap, this principle guides how we design our tools and workflows. From reviewing inputs to interpreting outputs and validating results, you have absolute control. This ensures that AI becomes a thought partner, not a black box, because we believe AI should augment human intelligence, not replace it.

In a world where AI is increasingly making decisions that affect businesses, markets, and lives, responsibility matters. Human-in-the-Loop ensures transparency and accountability, and it creates trust. This approach aligns with our broader commitment to Accountable AI, where fairness, auditability, and remediation are built into every system.

Looking Ahead

Accountable AI isn’t just a technical challenge; it’s a cultural and organizational one. As AI continues to evolve, companies that prioritize accountability will not only comply with regulations but also build stronger, more trusted relationships with their customers and communities.